Scrapbook transcription process

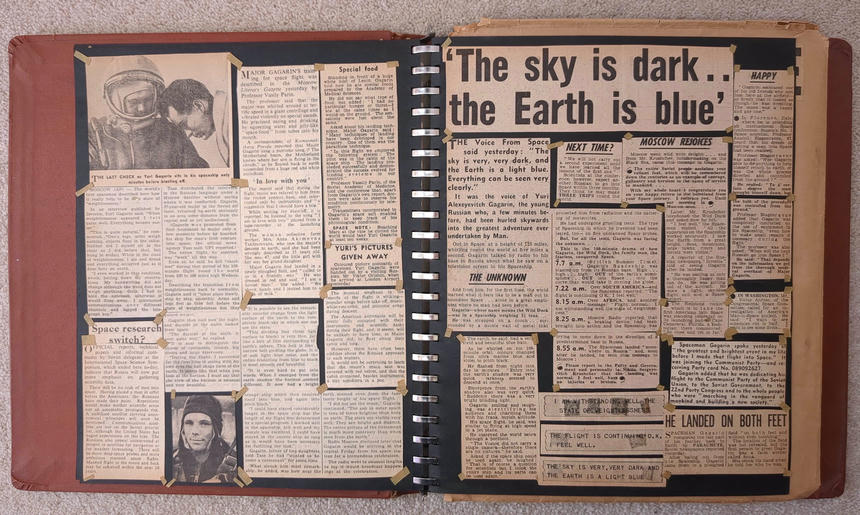

I inherited a couple of scrapbooks full of newspaper clippings from the Space Race of the 1960s, mostly from British newspapers (including the Evening Standard, Daily Mail, Daily Mirror, and Daily Sketch). Unfortunately they are suffering from some physical deterioration — in particular the sixty-year-old sticky tape holding most of the clippings onto the pages has turned into unsticky tape, which makes it impossible to turn a page without half of it falling off — so I thought it would be nice to try preserving them digitally.

The results can be found here. This post gives a little detail on the process, which is mostly quite boring.

I didn’t have access to a scanner or decent camera when I started, so my first attempt was to simply photograph each page with my phone. Not great quality, but it’s just about readable. Then I wanted to OCR it, to make the text more readable and searchable.

I started with AWS’s Textract. (There may be better tools but this worked okay for me so I didn’t look any further). It’s fairly easy to use if you’ve already got an AWS account set up: upload a JPEG to S3, then run:

This returns lists of LINEs and WORDs, each with bounding boxes:

,

,

(I did this before Textract introduced the layout feature, which groups lines into paragraphs and would have helped here.)

The API documentation says “The minimum height for text to be detected is 15 pixels”. But in my crude experimenting, what really matters is the size of the text relative to the image: in many cases it failed to detect any text in the full photos, and scaling the image did not help, but it detected pretty much all the text when cropped tightly to a single page. So I manually cropped every page from the photos and fed them each through Textract.

That worked pretty well despite the low quality of the photos.

The most common errors were confusing i, I and l (it was particularly fond of writing Yurl Gagarin),

bad punctuation (. vs , and " vs '),

and understandably missing or mistaking words that are barely legible through stains on the paper.

Occasionally it appeared to only detect in a sub-region of the image,

missing the leftmost few characters from an entire column, for no obvious reason.

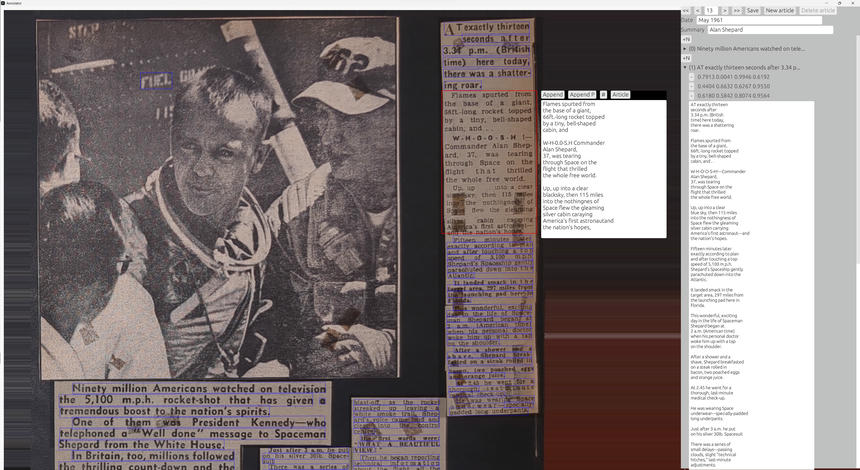

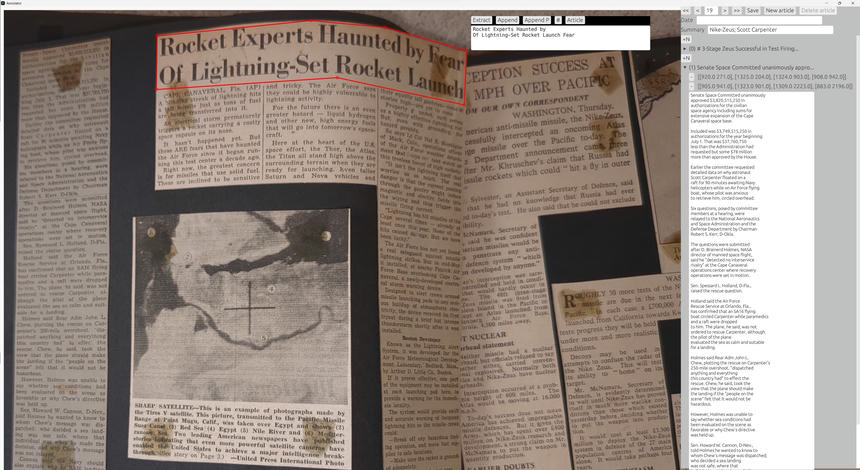

The next issue was rearranging the detected lines of text into columns and articles. I assumed it was unlikely there was a tool that could reliably do this automatically, as the layout of some pages is quite non-obvious, so it was always going to need some manual work. So I wrote a (very hacky) tool, using Rust and egui, to help with that:

This displays a page’s image, plus the bounding boxes of all the detected lines. You can drag a box around a column of text and it concatenates all the lines, with some heuristics to dehyphenate the text and to insert paragraph breaks where it detects an indented line; then it displays a text box beside the selected column, so you can compare the text and manually fix any OCR errors or formatting errors. Then you can click some buttons to append the column to the current article or start a new article. Repeat for every article, then repeat for every page.

This was far from perfect and still took a lot of manual effort to improve accuracy, but it basically worked for the first scrapbook.

The second scrapbook had some denser layouts of articles (meaning Textract would more frequently interpret two adjacent lines as a single indivisible line), plus it was harder to lay the pages flat (I didn’t want to remove the string binding the pages together because I wasn’t confident I could put it back together again) so some of the photos are quite wonky.

In an attempt to get more accurate results from Textract, I tried a new approach: instead of converting the whole page at once, now the tool lets you select the polygonal outline of a single column or headline, which gets cropped and sent to Textract through the aws-sdk-textract API. Since Textract charges per API call, this does make it more expensive – but it’s about $0.0015 per call, so at this scale it doesn’t matter.

Textract seems to cope fine with linear transformations (e.g. rotation or shear) of the image, but struggles when it’s non-linear (e.g. curved pages where lines are not all going at the same angle). In that case it helps to manually select and transcribe the column as a series of small nearly-uniform parts and concatenate the text, rather than doing it all at once.

Textract was a lot more accurate for the second scrapbook, probably because the photos were taken from slightly closer so the text was larger and clearer.

After finishing both scrapbooks, I found an envelope containing many loose cuttings about Telstar. They were easy to place on a flatbed scanner, so I started with much higher-quality images that made Textract much more reliable (especially for punctuation), but otherwise the process was the same.

The code for the annotation tool is available on GitHub, though it’s designed specifically for this project and is probably not of any wider use.